Train the Digital Assistant chatbot with Utterances

Adenin's Digital Assistant chatbot has built-in intent-matching technology called 'Utterances', which allows you to build sophisticated natural phrases that trigger and suggest Cards when users search. For example, suppose we want to create a Card that displays an employee's manager when we search for it:

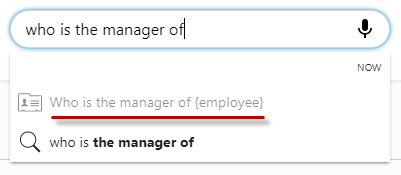

When the user begins typing the utterance 'who is the manager of', search recognizes a potential match between this Card and the query and shows a suggestion in the dropdown.

With the argument in {curly braces}, the suggestion signals to the user that, although there is a match, it expects further input before the search can be sent. This argument is called an 'entity'. In our example, the suggestion remains grayed out until we complete the sentence, e.g. 'who is the manager of John Smith'. Search then captures 'John Smith' as the entity, and the suggestion becomes a valid selection.
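The suggestion behavior described above can be sketched in a few lines of Python. This is only an illustration, not Adenin's implementation: the function name `suggestion_state` and the three state labels are hypothetical, and the sketch assumes an utterance whose single entity comes at the end of the phrase.

```python
def suggestion_state(utterance_prefix: str, query: str) -> str:
    """Classify a query against the fixed part of an utterance.

    Hypothetical helper: 'incomplete' stands for the grayed-out suggestion,
    'valid' for a selectable one, 'no match' for no suggestion at all.
    """
    # Lowercase and collapse whitespace so the comparison is forgiving.
    prefix = " ".join(utterance_prefix.lower().split())
    typed = " ".join(query.lower().split())

    if typed.startswith(prefix + " "):
        # The user has typed something after the fixed phrase: the entity.
        return "valid"
    if typed and prefix.startswith(typed):
        # The user is still typing the fixed phrase, or has typed all of it
        # but has not supplied an entity yet.
        return "incomplete"
    return "no match"
```

With the prefix 'who is the manager of', the query 'who is the man' is classified as incomplete, and 'who is the manager of John Smith' as valid.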

Unlike traditional AI engines, this approach is multilingual: you can write Utterances in any language, or in several languages at once.

Write training data using Utterances

There are three main utterance operators that you can use to construct your utterances:

- Parentheses

  Where is (my) video?: The word in parentheses is optional, so the associated Card is shown in search even if the word 'my' is not present in the phrase.

- Pipe symbols

  Where are the (manager|contractor|service provider) files?: The user can use any one of the alternatives in the parentheses to get the same result. Search treats 'manager', 'contractor' and 'service provider' as equivalent. At least one of the alternatives must be present in the query.

- Curly braces

  What is the weather in {country}?: This is used for data inclusion, which, as seen earlier, uses an entity inside the phrase to push a search term to the API service for this Card.
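To make the semantics of the three operators concrete, here is a minimal sketch that translates an utterance into a regular expression. It is not how the Digital Assistant works internally; the names `utterance_to_regex` and `match_utterance` are hypothetical, and the sketch assumes that entity names are plain identifiers, that operators are not nested, and that trailing punctuation can be ignored.

```python
import re

# An operator group "(...)", an entity "{...}", or a run of literal text.
TOKEN = re.compile(r"\([^)]*\)|\{[^}]*\}|[^(){}]+")


def _normalize(text: str) -> str:
    # Ignore surrounding whitespace and trailing punctuation such as '?'.
    return text.strip().rstrip("?!.").strip()


def utterance_to_regex(utterance: str) -> re.Pattern:
    parts = []
    for token in TOKEN.findall(_normalize(utterance)):
        if token.startswith("("):
            options = [re.escape(o.strip()) for o in token[1:-1].split("|")]
            group = "(?:" + "|".join(options) + ")"
            # (a|b) requires one alternative; a single (word) is optional.
            parts.append(group if len(options) > 1 else group + "?")
        elif token.startswith("{"):
            # {entity} captures whatever the user typed in that position.
            parts.append("(?P<" + token[1:-1].strip() + ">.+)")
        else:
            words = r"\s+".join(re.escape(w) for w in token.split())
            lead = r"\s*" if token[:1].isspace() else ""
            trail = r"\s*" if token[-1:].isspace() else ""
            parts.append(lead + words + trail)
    return re.compile("".join(parts), re.IGNORECASE)


def match_utterance(utterance: str, query: str):
    """Return the captured entities as a dict, or None if there is no match."""
    m = utterance_to_regex(utterance).fullmatch(_normalize(query))
    return None if m is None else m.groupdict()


print(match_utterance("Where is (my) video?", "where is video"))
# {}  (a match with no entities)
print(match_utterance("What is the weather in {country}?",
                      "What is the weather in France?"))
# {'country': 'France'}
```

Under these assumptions, 'where are the files' does not match the pipe example, because none of the alternatives is present.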

Utterances are associated with Cards and should generally be kept unique: if two Cards have exactly the same utterance, both Cards will be shown in search results and in the typeahead suggestions.
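If you keep a list of your Cards and their utterances outside the platform, a short script can flag utterances that are shared between Cards. The sketch below assumes a plain dictionary mapping Card names to utterance lists; this data shape and the function name `find_shared_utterances` are illustrative and not part of the product.

```python
from collections import defaultdict


def find_shared_utterances(cards: dict) -> dict:
    """Map each utterance used by more than one Card to those Card names."""
    owners = defaultdict(list)
    for card_name, utterances in cards.items():
        # Compare case-insensitively and ignore extra whitespace;
        # the set avoids counting a Card twice for a repeated utterance.
        for key in {" ".join(u.lower().split()) for u in utterances}:
            owners[key].append(card_name)
    return {u: names for u, names in owners.items() if len(names) > 1}


cards = {
    "Weather": ["What is the weather in {city}?"],
    "Forecast": ["what is the  weather in {city}?", "Forecast for {city}"],
}
print(find_shared_utterances(cards))
# {'what is the weather in {city}?': ['Weather', 'Forecast']}
```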

Data inclusion

If you have a Card that searches for local weather forecasts, you might associate the following utterance with it: What's the weather like in {city}. Everything the user types in place of the curly braces is passed to the search service of this Card, which has to be customized to return the necessary data for the Card, in this case a forecast for the city that was entered.
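The flow from query to search service can be sketched as follows. The regular expression is a hand-written stand-in for the utterance above, and `handle_query` and `echo_search` are hypothetical names; a real Card would call the search Service of its Connector rather than the placeholder used here.

```python
import re

# Hand-written equivalent of: What's the weather like in {city}
WEATHER = re.compile(
    r"what's the weather like in\s+(?P<city>.+?)\s*\??$", re.IGNORECASE
)


def handle_query(query: str, search_service):
    """Extract the {city} entity and hand it to the Card's search service."""
    m = WEATHER.match(query.strip())
    if m is None:
        return None  # the query does not match, or the entity is missing
    return search_service(m.group("city"))


def echo_search(term: str) -> dict:
    # Placeholder service: only shows which search term it would receive.
    return {"service": "search", "term": term}


print(handle_query("What's the weather like in Berlin?", echo_search))
# {'service': 'search', 'term': 'Berlin'}
```

Only the text captured by the entity reaches the service; the rest of the phrase is used solely to select the Card.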

Although the Services each Connector offers may differ, in general any Service that offers a "search" is suitable for data inclusion utterances.

The Connector and Service used by a Card, like its utterances, can be edited in the Card's metadata screen.